Fang Fang and Nihong Chen of the PKU-IDG/McGovern Institute for Brain Research, the Department of Psychology, and the Center for Life Sciences at Peking University, together with their collaborators, have recently published a paper in PNAS (Proceedings of the National Academy of Sciences of the United States of America) titled ‘Perceptual learning modifies the functional specializations of visual cortical areas’. They show that perceptual learning in visually normal adults shapes the functional architecture of the brain in a much more pronounced way than previously believed.

Training can improve performance of perceptual tasks. This phenomenon, known as perceptual learning, is strongest for the trained task and stimulus, leading to a widely accepted assumption that the associated neuronal plasticity is restricted to brain circuits that mediate performance of the trained task. Nevertheless, learning does transfer to other tasks and stimuli, implying the presence of more widespread plasticity.

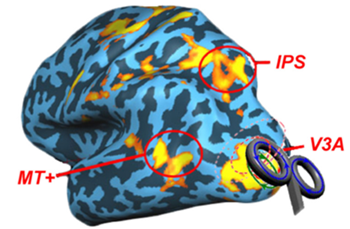

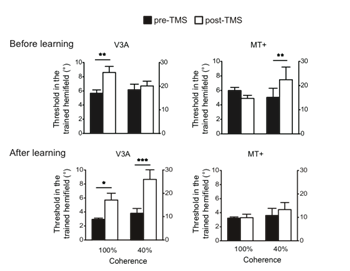

In their PNAS paper, Chen, Cai, Zhou, Thompson and Fang used TMS (transcranial magnetic stimulation) and fMRI (functional magnetic resonance imaging) to demonstrate that the transfer of perceptual learning from a task involving coherent motion to a task involving noisy motion can induce a functional substitution of V3A (an area in extrastriate visual cortex) for MT+ (middle temporal/medial superior temporal cortex) in processing noisy motion.

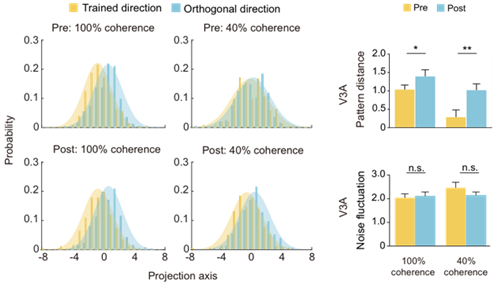

In the study, human subjects were trained to discriminate the direction of coherent motion stimuli. Their behavioral learning effect substantially transferred to noisy motion stimuli. The TMS experiment revealed dissociable, causal contributions of V3A and MT+ to coherent and noisy motion processing. Surprisingly, the contribution of MT+ to noisy motion processing was replaced by V3A following perceptual training. The fMRI experiment complemented and corroborated the TMS finding. Multivariate pattern analysis showed that, before training, among visual cortical areas, coherent and noisy motion were decoded most accurately in V3A and MT+, respectively. After training, both kinds of motion were decoded most accurately in V3A.

These findings demonstrate that the effects of perceptual learning extend far beyond the retuning of specific neural populations that mediate performance of the trained task. Learning could dramatically modify the inherent functional specializations of visual cortical areas and dynamically reweight their contributions to perceptual decisions based on their representational qualities.

The study was supported by the Ministry of Education, NSFC, the PKU-IDG/McGovern Institute for Brain Research and the Peking-Tsinghua Center for Life Sciences.